A Client-Side Coder Learns ML: Linear Regression with LinearRegressor

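I recently went through Google's official Machine Learning Crash Course and worked through some of its exercises. One of them, "First Steps with TensorFlow", trains a linear regression model using LinearRegressor together with Pandas.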

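So I used the two to rewrite my linear regression training. Instead of generating synthetic data directly in Python as before, the data is now read from a CSV file exported from Excel: column A holds the 100 values 1 to 100, and column B = A*5 + 50 + RANDBETWEEN(-10, 10), i.e. a line with slope 5 and intercept 50 plus integer noise between -10 and 10.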
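For readers without Excel, a minimal Python sketch that produces data of the same shape might look like this. It assumes the CSV has no header row and just the two columns A and B; `make_rows` and `write_csv` are hypothetical names, and `random.randint` stands in for Excel's `RANDBETWEEN` (both include their endpoints).

```python
import csv
import random


def make_rows(n=100, seed=None):
    """Return n (a, b) pairs with a = 1..n and b = 5*a + 50 + noise.

    The noise mimics Excel's RANDBETWEEN(-10, 10): an integer drawn
    uniformly from -10 to 10, both ends included.
    """
    rng = random.Random(seed)
    return [(a, a * 5 + 50 + rng.randint(-10, 10)) for a in range(1, n + 1)]


def write_csv(path, rows):
    """Write the rows as a two-column CSV without a header row (an assumption)."""
    with open(path, "w", newline="") as f:
        csv.writer(f).writerows(rows)
```

Usage: `write_csv("data.csv", make_rows(seed=42))`, where the file name is only an example. Passing a fixed seed makes the noise reproducible between runs, which Excel's `RANDBETWEEN` (recalculated on every edit) does not.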

Reading the dataset and preparing features

```python
import numpy as np
```
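The reading step can be sketched with Pandas as follows. This is a hedged reconstruction, not the author's code: it assumes the CSV has no header row, uses the hypothetical column names `x` and `y` and helper `load_dataset`, and returns the feature as a one-column DataFrame and the label as a Series under the names `my_feature_dataframe` and `target_series`, which the evaluation code later passes to `my_input_fn`. A noise-free in-memory CSV stands in for the real file.

```python
import io

import pandas as pd


def load_dataset(csv_source):
    """Read a header-less two-column CSV into a feature DataFrame and a target Series."""
    df = pd.read_csv(csv_source, header=None, names=["x", "y"])
    # Double brackets keep a DataFrame (one column per feature).
    my_feature_dataframe = df[["x"]].astype("float32")
    # Single brackets give a Series holding the label.
    target_series = df["y"].astype("float32")
    return my_feature_dataframe, target_series


# Stand-in data: column B without the RANDBETWEEN noise.
sample_csv = io.StringIO("\n".join(f"{a},{a * 5 + 50}" for a in range(1, 101)))
my_feature_dataframe, target_series = load_dataset(sample_csv)
```

With the real file, `load_dataset("data.csv")` would be used instead; `pd.read_csv` accepts either a path or a file-like object.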

Training

```python
my_optimizer = tf.train.GradientDescentOptimizer(learning_rate=0.0001)
```
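The update rule behind gradient descent can be illustrated without TensorFlow. The sketch below is plain full-batch gradient descent on the mean squared error of y = w·x + b, with the same learning rate of 0.0001. It illustrates the rule only and is not how `LinearRegressor` is implemented: the estimator trains on mini-batches supplied by the input function, and its loss reduction may differ. The function name `train_linear` is hypothetical.

```python
def train_linear(xs, ys, learning_rate=0.0001, steps=500):
    """Fit y = w * x + b by full-batch gradient descent on mean squared error.

    Returns (w, b, loss_history), where loss_history[i] is the MSE
    measured before update i.
    """
    w, b = 0.0, 0.0
    n = len(xs)
    loss_history = []
    for _ in range(steps):
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        loss_history.append(sum(e * e for e in errors) / n)
        # Partial derivatives of mean(e^2) with respect to w and b.
        grad_w = 2.0 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2.0 * sum(errors) / n
        # Step against the gradient.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b, loss_history
```

Because the gradient for w is scaled by x (up to 100 here), it dominates and limits how large the learning rate can be, while b, whose gradient is not scaled, moves slowly. Expect the slope to settle within a few dozen steps and the intercept to need far more steps to approach 50.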

Evaluating the results

```python
predict_input_fn = lambda: my_input_fn(my_feature_dataframe, target_series, num_epochs=1, shuffle=False)
```
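Because `predict_input_fn` makes a single pass (`num_epochs=1`) in the original row order (`shuffle=False`), the i-th prediction lines up with the i-th target, so the predictions can be scored directly. A common score is the root mean squared error (RMSE), which is in the same units as the target. A minimal plain-Python sketch, with the hypothetical helper name `root_mean_squared_error`:

```python
import math


def root_mean_squared_error(predictions, targets):
    """RMSE between two equal-length, non-empty sequences of numbers."""
    if len(predictions) != len(targets) or not targets:
        raise ValueError("need two non-empty sequences of equal length")
    mse = sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)
    return math.sqrt(mse)


# Example: predictions from w = 5, b = 50 against targets off by -3 or +3.
xs = [1, 2, 3, 4]
predictions = [5 * x + 50 for x in xs]
targets = [p + (3 if i % 2 else -3) for i, p in enumerate(predictions)]
print(root_mean_squared_error(predictions, targets))  # 3.0
```

As a point of reference for this dataset: RANDBETWEEN(-10, 10) noise has a standard deviation of about 6.06 (variance (21² - 1) / 12 ≈ 36.7), so even the true line y = 5x + 50 would score an RMSE of roughly 6 against the noisy targets.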

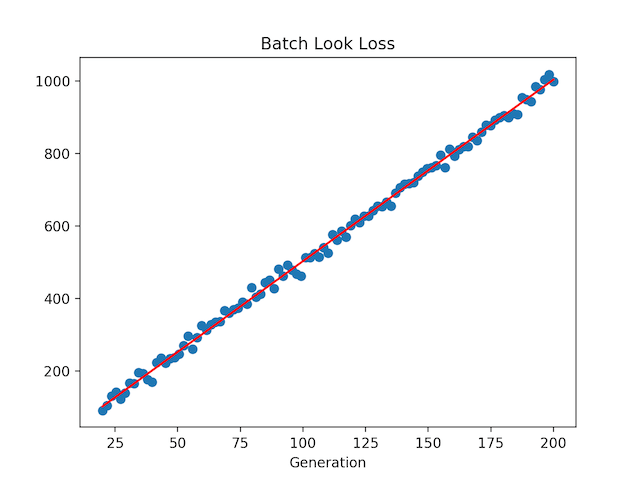

Visualizing the results

```python
def show_visualization_data(x_data_array, y_data_array, w, b, loss_vec, title=None):
```
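Only the signature of `show_visualization_data` survives above. One plausible body, assuming matplotlib and assuming the intent is to plot the data points with the fitted line y = w·x + b next to the loss curve `loss_vec`, is sketched below. It is a guess at the layout, not the author's code.

```python
import matplotlib

matplotlib.use("Agg")  # render off-screen; remove this line to get interactive windows
import matplotlib.pyplot as plt


def show_visualization_data(x_data_array, y_data_array, w, b, loss_vec, title=None):
    """Plot the data with the fitted line y = w * x + b (left) and the loss curve (right)."""
    fig, (ax_fit, ax_loss) = plt.subplots(1, 2, figsize=(10, 4))

    # Left panel: raw points plus the straight line defined by w and b.
    ax_fit.scatter(x_data_array, y_data_array, s=10, label="data")
    x_min, x_max = min(x_data_array), max(x_data_array)
    ax_fit.plot([x_min, x_max], [w * x_min + b, w * x_max + b], color="red",
                label=f"y = {w:.2f}x + {b:.2f}")
    ax_fit.set_xlabel("x")
    ax_fit.set_ylabel("y")
    ax_fit.legend()

    # Right panel: loss value recorded at each training step.
    ax_loss.plot(range(len(loss_vec)), loss_vec)
    ax_loss.set_xlabel("step")
    ax_loss.set_ylabel("loss")

    if title:
        fig.suptitle(title)
    return fig
```

Returning the figure lets the caller decide between `fig.savefig("fit.png")` and `plt.show()`; a straight line only needs its two end points, so the fitted line is drawn from the minimum to the maximum x.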

This produces a figure similar to the one in my previous post, "A Client-Side Coder Learns ML: Implementing Linear Regression with TensorFlow".

References

https://developers.google.cn/machine-learning/crash-course/prereqs-and-prework

https://colab.research.google.com/notebooks/mlcc/first_steps_with_tensor_flow.ipynb?hl=zh-cn

This article was first published on Qian Kaikai's blog: http://qianhk.com/2018/02/客户端码农学习ML-使用LinearRegressor实现线性回归/
